Mon, Jun 5, 2023

Replit AI Manifesto

Amjad Masad, CEO: I'm excited to welcome Michele Catasta to the Replit team as our VP of AI. Michele joins us from Google, where he was Head of Applied Research at Google Labs and, before that, Google X, where he researched Large Language Models applied to code. Michele has a Ph.D. in Computer Science and was a Research Scientist and Instructor in AI at Stanford University. This manifesto is the culmination of a conversation Michele and I have had for over a year on the future of AI and its impact on the software industry:

“People who are really serious about software should make their own hardware.” [1982]

More than 40 years later, Alan Kay’s quote still sounds as relevant as it gets – no wonder he is recognized as one of the most influential pioneers of our industry. As a matter of fact, over the past few decades software and hardware worked in conjunction to dramatically augment human intelligence. Steve Jobs celebrated Alan’s quote in his famous 2007 keynote, right after launching the very first iPhone – possibly one of Steve’s mottos, considering how Apple built its reputation and massive success story on unprecedented software/hardware integration.

At Replit, our mission is to empower the next billion software creators. Our users build software collaboratively with the power of AI, on any device, without spending a second on setup. We accomplished that by creating a delightful developer experience from the ground up. The Replit development environment allows our users to focus solely on writing software, while most of the hardware (and Cloud computing) complexities are carefully hidden away.

Wed, May 31, 2023

Replit Builders Series - NodePad: From Idea to MVP with Replit Deployments

Replit is where builders come to bring their software and app ideas to life, fast. We’re showcasing builders and the software they created and deployed on Replit. NodePad is a platform for idea exploration driven by AI. NodePad founder Saleh Kayyali went from designer to consumer app founder. The app he built and deployed end to end on Replit has thousands of users and a slick UI. It shot to #5 on Hacker News, and yet he’s never been paid to develop software before. How?

Mon, May 29, 2023

Configurable Keybindings for the Workspace

We recently launched customizable keyboard shortcuts to supercharge the workspace for every Replit user. This makes Replit more powerful for everyone, from beginners to experts, by unlocking the ability to perform more actions in the workspace without leaving your keyboard. Defining all the actions available to a user in a keybindings system also increases the discoverability of lesser-known features. Finally, the increased customization handles the challenge of building for many types of users working in different environments.

How keybindings work

To see all the keybindings available to you, head to the Settings tab in the workspace. Your keybinding settings follow you across Repls, browsers, and devices with no work required. Search for a keybinding by name or description, or press the “Record” button to the right of the search bar to search by the keybinding itself. Changes to your shortcuts are saved instantly, and the UI updates accordingly to reflect your new keybinding settings.

Thu, May 25, 2023

Super Colliding Nix Stores: Nix Flakes for Millions of Developers

We’ve teamed up with Obsidian Systems and Tweag to enable Nix to merge multiple (possibly-remote) Nix stores, bringing Nix Flakes and development environment portability to millions of Replit users. Nix is an open-source cross-platform package manager and build tool that lets you access the most up-to-date and complete repository of software packages in the world. Nix’s approach to package building ensures that software and development environments will always work the same way, no matter where they are deployed. Replit has bet big on Nix because we believe in a future where developers are free from the drudgery of setting up their development and production environments: a world where onboarding a co-worker is as simple as forking a Repl. When you use Nix to describe your environment, you don’t have to redo your setup everywhere you want your code to work. As Mitchell Hashimoto, founder of HashiCorp, said: one big benefit is that once you adopt Nix, you can get a consistent environment across development (on both Linux and Mac), CI, and production.

Fri, May 19, 2023

May 18 Replit downtime

Yesterday, we had a period of about 2h from 11:45 to 13:56 (Pacific Time) in which all users were unable to access their Repls through the site. We have addressed the root cause, and the system is now operating normally. We know a two-hour downtime is not acceptable for you or your users. This post summarizes what happened and what we're doing to improve Replit so that this does not happen again.

Technical details

Here is the timeline of what happened, all times in Pacific Time:

In 2021, we changed the way that configuration is pushed to the VMs that run Repls and streamlined multiple kinds of configurations. During this migration, a latent bug that we had not hit was introduced. When we tried to see if a configuration kind had been updated, we acquired a Go RWMutex for reading, but if there was no handler for that configuration kind, the read-lock would not be released. Go's RWMutex is write-preferring: acquiring multiple read-locks is allowed as long as no goroutine is attempting to acquire a write-lock. Once a goroutine is waiting for the write-lock, any future read-lock attempts will block.

On May 16, 2023, we introduced a new configuration kind, but we did not add a handler, which means that from this point on we were leaking read-locks, although the system was still able to make progress.
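To make the failure mode concrete, here is a minimal Go sketch of a leaked read-lock on a `sync.RWMutex`. It is an illustration under stated assumptions, not Replit's actual code: `configStore`, `checkLeaky`, `checkFixed`, `readerBlocksAfter`, the `"new-kind"` name, and the sleep and timeout durations are all hypothetical.

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// configStore is a hypothetical stand-in for the component that tracks
// configuration kinds and their handlers.
type configStore struct {
	mu       sync.RWMutex
	handlers map[string]func()
}

// checkLeaky mirrors the bug pattern described above: when no handler
// exists for the kind, it returns without releasing the read-lock.
func (s *configStore) checkLeaky(kind string) bool {
	s.mu.RLock()
	h, ok := s.handlers[kind]
	if !ok {
		return false // BUG: the read-lock is never released on this path
	}
	s.mu.RUnlock()
	h()
	return true
}

// checkFixed releases the read-lock on every path by using defer.
func (s *configStore) checkFixed(kind string) bool {
	s.mu.RLock()
	defer s.mu.RUnlock()
	h, ok := s.handlers[kind]
	if !ok {
		return false
	}
	h()
	return true
}

// readerBlocksAfter runs check for a kind that has no handler, lets a
// writer queue up, and reports whether a new reader then stalls.
func readerBlocksAfter(check func(*configStore, string) bool) bool {
	s := &configStore{handlers: map[string]func(){}}
	check(s, "new-kind") // hypothetical kind with no registered handler

	// A writer tries to take the lock. If a read-lock was leaked, it waits
	// forever, and every later reader queues up behind it.
	go func() {
		s.mu.Lock()
		s.mu.Unlock()
	}()
	time.Sleep(50 * time.Millisecond) // give the writer time to reach Lock()

	done := make(chan struct{})
	go func() {
		s.mu.RLock()
		s.mu.RUnlock()
		close(done)
	}()

	select {
	case <-done:
		return false // the reader got through
	case <-time.After(200 * time.Millisecond):
		return true // the reader is stuck behind the waiting writer
	}
}

func main() {
	fmt.Println("leaky check stalls later readers:", readerBlocksAfter((*configStore).checkLeaky))
	fmt.Println("fixed check stalls later readers:", readerBlocksAfter((*configStore).checkFixed))
}
```

The sketch also shows why such a leak can stay hidden: other readers keep succeeding while read-locks leak, and the stall only appears once a goroutine asks for the write-lock.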

Sun, May 14, 2023

Making Git Good

Improving Git on Replit

There has been a new Git pane in Repls for a little while now. This new Git UI you see is part of a complete rewrite of everything Git-related on Replit: both engineering and design. It is the start of our journey into more deeply integrating Git into Replit, with much more to come. Let's talk about it!

Mon, May 8, 2023

Recapping the SPC-Replit AI Hackathon

This is a guest post by South Park Commons. SPC is a community of 500+ builders, technologists, and domain experts with locations in San Francisco and New York City. The recent SPC-Replit AI hackathon brought together talented builders from the SPC community and Replit network to show off their technical chops and creativity by building cutting-edge AI applications. Participants had a week to develop a working demo that incorporated AI in some capacity and was built on Replit. From meal planning to baby-AGI's and even a bot that points out logical fallacies, these projects are proof of the limitless potential of AI. Check out the individual project highlights below!

Dialectic - Improving Conversational Health - 1st Place 🥇

Team: Somudro Gupta & Casey Caruso

Fri, May 5, 2023

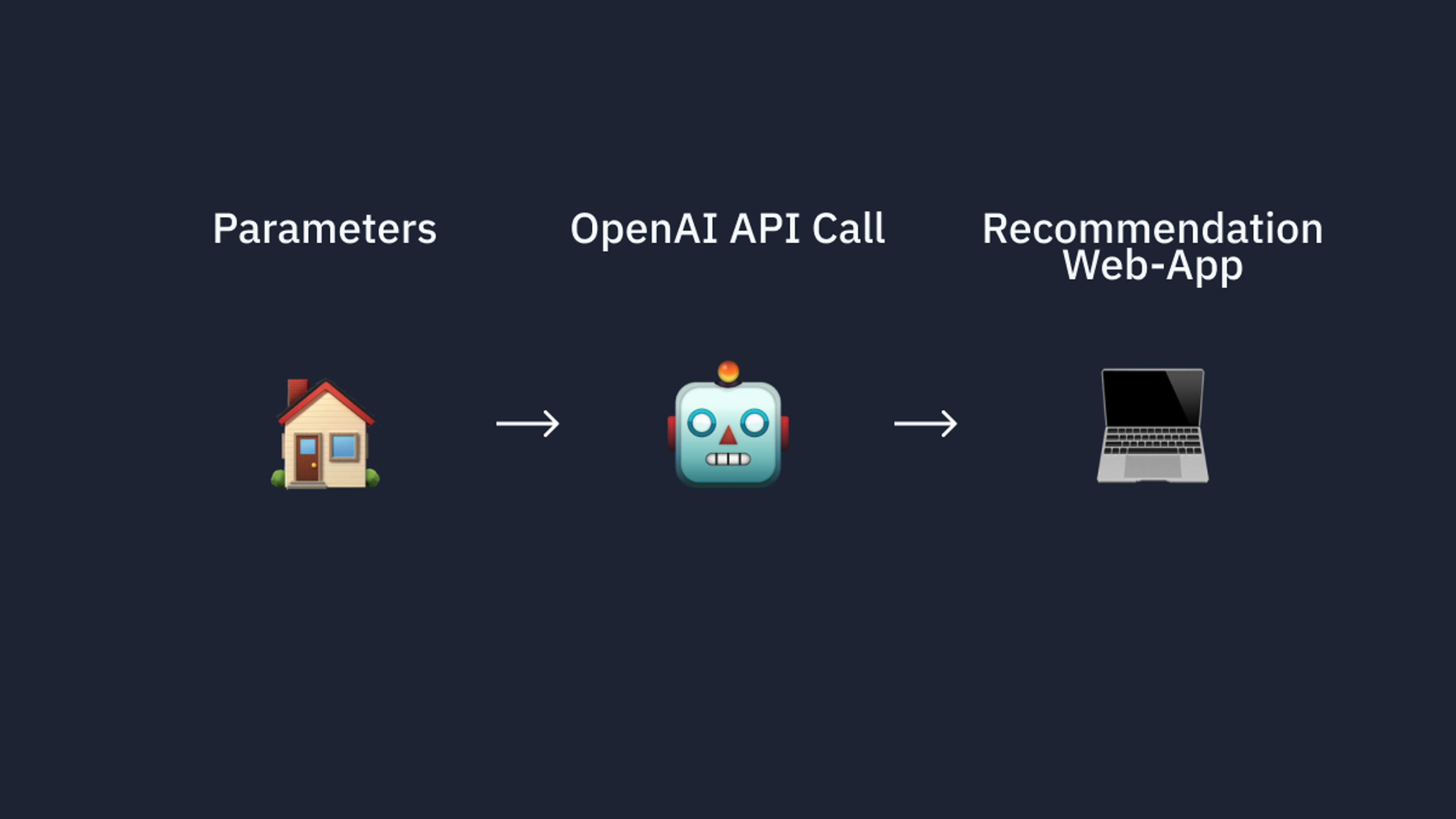

Replit Bounties: AI-Powered Real Estate Recommendation Webapp

Problem Statement

Christo Hefer is Founder and COO at Symplete, a real estate technology platform that lets agents handle marketing and sales and helps them select the best purchase agreements for their listings. He wanted to create a prototype where a residential purchase agreement could be uploaded in PDF format and analyzed using OpenAI’s GPT-3 API. Based on 15-20 different fields as inputs, the best purchase agreement should be recommended to the agent.

Building the Prototype

To do this, the Bounty Hunter Syed (Replit username: cudanexus) used a Python library to read the residential agreement PDF. He then used the GPT-3 API to analyze the document and present the real estate agent with the optimal agreement for their listing. The parameters used included the address, price, and offer expiry dates, among others.

Mon, May 1, 2023

Announcing Replit Extensions

At Developer Day, we revealed our commitment to increasing the extensibility of Replit to better serve the diverse needs of our community of makers and all developers. And today, we’re excited to announce a major milestone in our mission to empower a billion developers: the launch of Replit Extensions.

Extending the Replit Environment

Makers have an ever-present desire to have control over their tools. This is true in every creative domain, be it software, music, photography, or painting. What makes software unique is that much of the tooling is itself software, and with any mastery of software, you can begin to shape your tools to your needs. Making Replit extensible has been at the top of our minds since the beginning. We've been busy at work: we've iterated on our core primitives and our layout system, refactored our code towards modularity, allowed people to configure their Repls, and even replaced our code editor with one that's far more composable, with extensibility as one of the driving reasons.

Mon, May 1, 2023

Copyright Law in the Age of AI: The Role of Licensing in Replit's Development

Thousands of unique users code in Replit every day. Few of them probably give much thought to the copyright that attaches to their code. They might be surprised to learn that copyright – specifically, the way that Repls are licensed – is fundamental to the way that Replit works. As the AI software revolution proceeds apace, copyright law is again playing a fundamental role in how it develops – and in how Replit brings the benefits of this technology to our users. We’ll explain all in this blog post. But before we get there, time for a history lesson.

A brief history of software licensing

The relationship between copyright and computer software wasn’t always obvious, even to lawyers. Early versions of the Unix operating system, developed at Bell Labs in the 1970s, were distributed freely along with their source code, so that the recipients of the code could hack on it. Decades later this led to a copyright lawsuit, but following its settlement, entirely free distributions of Unix became legally available, with their components licensed under free software licenses such as the MIT license.

Sun, Apr 30, 2023

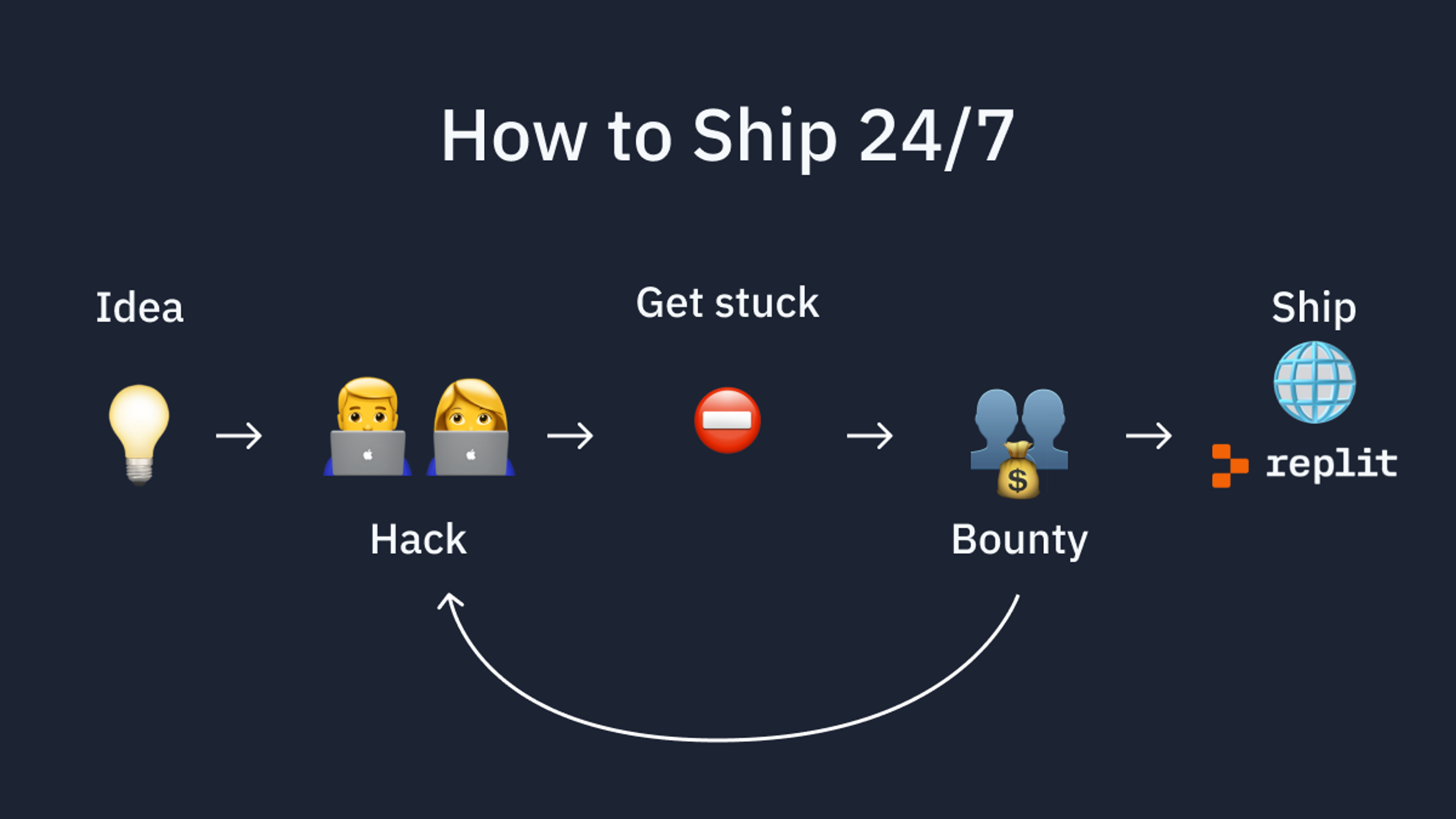

Replit Bounties: Ship Code in Your Sleep

"Through Bounties, I was able to get high-quality developers that are able to fulfill a problem statement really fast. Replit’s Bounty Hunters ensure that the client is happy with the end product and have a high quality of work product.. and are interested in making the best versions of the apps possible."

@girlbossintech | Software Engineer

Introduction

@girlbossintech is a software developer and Twitter creator. She wanted to test out an idea for a social polling app, similar to popular polling web apps like Gas App, where users would be served a question and could select an option, which would then take them to the next question. However, being busy with work, she decided to outsource the implementation of this idea. After seeing Replit Bounties on Twitter and receiving a recommendation from a friend, @girlbossintech decided to try it out.

Building the Prototype

Tue, Apr 25, 2023

A Recap of Replit Developer Day

At Developer Day, we announced:

- Production-grade Deployments straight from your IDE
- A more powerful Workspace
- Secure Workspace Extensions
- Replit’s From-Scratch Trained Code Complete model

Production-grade Deployments Straight from your IDE

Tue, Apr 25, 2023

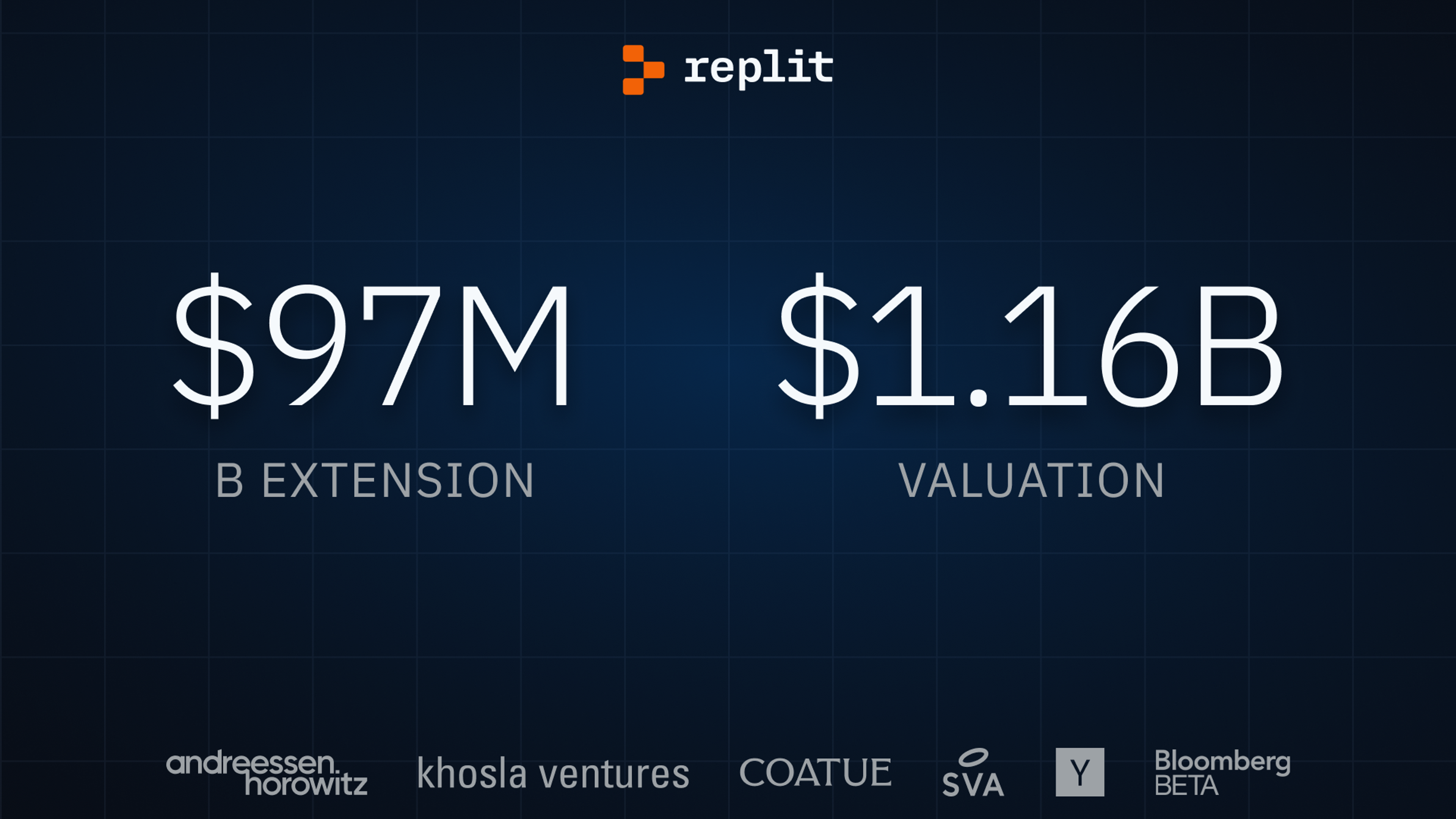

Raising $97.4M at $1.16B Valuation to Expand our Cloud Services and Lead in AI Development

Note: The press release for our funding announcement can be found below. You can find the original press release here.

Replit is Building the Best Cloud and AI Development Experience for Software Creators

Replit, the world's fastest-growing developer platform and creator of Ghostwriter, the generative AI for software development, announced the close of its $97.4 million financing round at a $1.16 billion post-money valuation. Replit will use the new funds to innovate on its core development experience, expand its cloud services for developers, and drive innovation in AI and LLMs through Ghostwriter.

Investor Participation and Funding Details

Tue, Apr 18, 2023

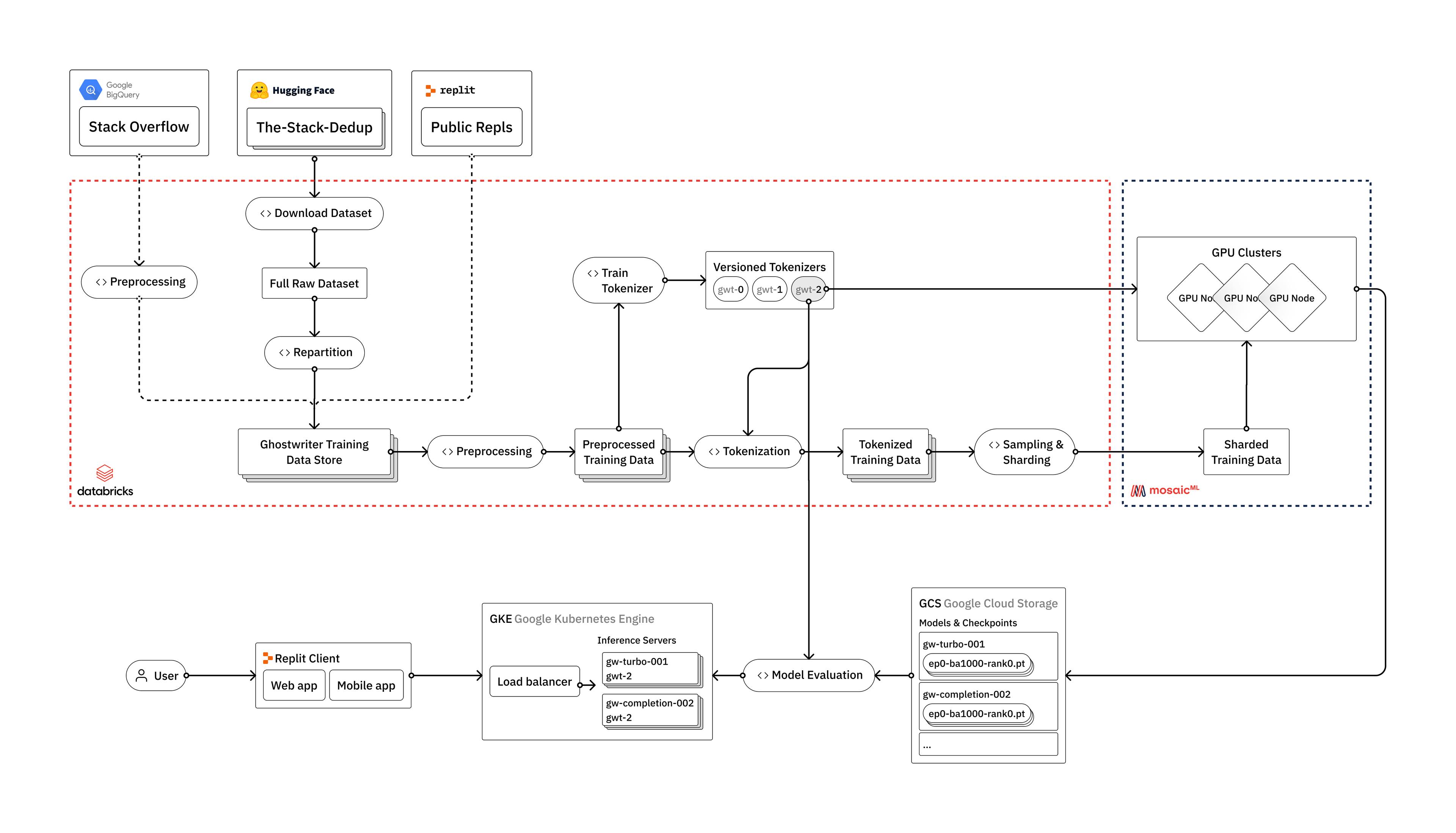

How to train your own Large Language Models

Learn how Replit trains Large Language Models (LLMs) using Databricks, Hugging Face, and MosaicML

Introduction

Large Language Models, like OpenAI's GPT-4 or Google's PaLM, have taken the world of artificial intelligence by storm. Yet most companies don't currently have the ability to train these models, and are completely reliant on only a handful of large tech firms as providers of the technology. At Replit, we've invested heavily in the infrastructure required to train our own Large Language Models from scratch. In this blog post, we'll provide an overview of how we train LLMs, from raw data to deployment in a user-facing production environment. We'll discuss the engineering challenges we face along the way, and how we leverage the vendors that we believe make up the modern LLM stack: Databricks, Hugging Face, and MosaicML. While our models are primarily intended for the use case of code generation, the techniques and lessons discussed are applicable to all types of LLMs, including general language models. We plan to dive deeper into the gritty details of our process in a series of blog posts over the coming weeks and months.

Why train your own LLMs?

Tue, Apr 11, 2023

Replit Deployments - the fastest way from idea → production

After a 5-year Hosting beta, we're ready to Deploy.

Introducing Replit Deployments

Today we’re releasing Replit Deployments, the fastest way to go from idea to production in any language. It’s a ground-up rebuild of our application hosting infrastructure. Here’s a list of features we’re releasing today:

- Your hosted VM will rarely restart, keeping your app running and stable.
- You’re Always On by default. No need to run pingers or pay extra.