AI-generated code is changing how software is built, but securing that code raises new challenges. This research explores whether AI-driven security scans are sufficient for vibe coding platforms, or whether they risk asking models to audit their own output.

Through controlled experiments on React applications with realistic vulnerability variants, we compare AI-only security scans with Replit’s hybrid approach, which combines deterministic static analysis and dependency scanning with LLM-based reasoning. Along the way, we examine how prompt sensitivity, nondeterminism, and ecosystem awareness affect real-world security outcomes.

We show that functionally equivalent code can receive different security assessments depending on syntactic form or prompt phrasing. Issues like hardcoded secrets may be detected in one representation and missed in another. More critically, dependency-level vulnerabilities and supply-chain risks remain largely invisible without traditional scanning infrastructure.
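To make "functionally equivalent code in different syntactic forms" concrete, here is a minimal sketch. It is not taken from the white paper or from any real scanner: the three JavaScript variants, the placeholder key value, and the `SECRET_RULE` pattern are all assumptions made for illustration. It shows how a fixed rule returns the same result for each representation on every run, which is the property an LLM-only scan does not guarantee.

```python
import re

# Hypothetical rule (an assumption, far simpler than a production scanner):
# a long string literal assigned to a name containing key/secret/token/password.
SECRET_RULE = re.compile(
    r"""(?ix)
    \b[\w$]*(?:key|secret|token|password)[\w$]*   # suspicious identifier
    \s*[:=]\s*                                    # assignment or object property
    ["'`]([A-Za-z0-9_\-]{16,})["'`]               # literal of 16+ key-like chars
    """
)

# Three illustrative, functionally equivalent ways to hardcode the same
# placeholder secret in a React/JavaScript codebase.
VARIANTS = [
    'const apiKey = "EXAMPLEKEY0123456789";',
    "const config = { apiKey: 'EXAMPLEKEY0123456789' };",
    "const API_TOKEN = `EXAMPLEKEY0123456789`;",
]


def scan(source: str) -> list[str]:
    """Return every literal value the rule flags as a hardcoded secret."""
    return [match.group(1) for match in SECRET_RULE.finditer(source)]


# The same input always yields the same output, whatever the syntactic form.
results = [scan(variant) for variant in VARIANTS]
```

A rule this small only covers the patterns it was written for; the point is repeatability, not completeness.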

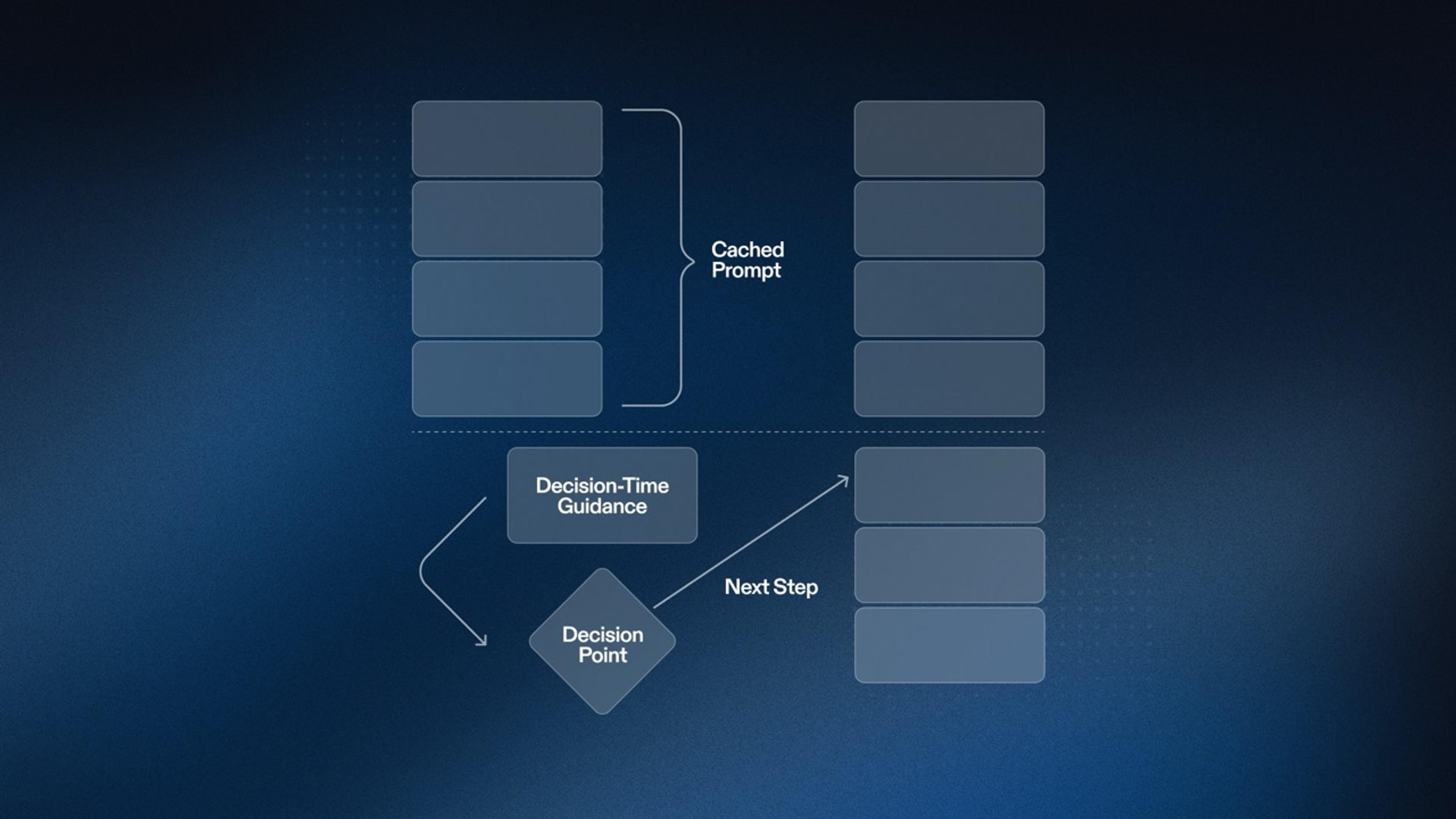

The takeaway is not that LLMs are ineffective, but that they are best used alongside deterministic tools. While LLMs can reason about business logic and intent-level issues, static analysis and dependency scanning are essential for establishing a reliable security baseline.

Key Findings:

- AI-only security scans are nondeterministic: Identical vulnerabilities receive different classifications based on minor syntactic changes or variable naming

- Prompt sensitivity limits coverage: Detection depends on which security issues the prompt explicitly mentions, shifting responsibility from the tool to the user

- Dependency vulnerabilities go undetected: Without a continuously updated vulnerability feed, an AI-only scan cannot reliably identify version-specific CVEs

- Static analysis provides consistency: Rule-based scanners deliver deterministic, repeatable detection across all code variations

- Hybrid architecture is essential: Combine deterministic baseline security with LLM-powered reasoning for comprehensive protection
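One way to picture the hybrid architecture in the findings above is the sketch below. It is an assumption-laden illustration, not Replit's implementation: the `ADVISORIES` feed, the `example-lib` package, the advisory ID, and the `llm_review` callback are all hypothetical. The deterministic dependency check runs first and its findings are always kept; whatever the LLM reviewer returns is added on top.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Finding:
    source: str  # "dependency-scan" or "llm-review"
    detail: str


# Hypothetical advisory feed: package -> (first fixed version, advisory id).
# A real pipeline would refresh this from a vulnerability database.
ADVISORIES = {"example-lib": ((2, 4, 1), "EXAMPLE-ADVISORY-0001")}


def parse_version(version: str) -> tuple[int, ...]:
    """Parse a plain dotted version such as '2.3.0' (no ranges or tags)."""
    return tuple(int(part) for part in version.split("."))


def dependency_scan(deps: dict[str, str]) -> list[Finding]:
    """Deterministic baseline: flag pinned versions older than the fix."""
    findings = []
    for name, version in sorted(deps.items()):
        if name in ADVISORIES:
            fixed, advisory_id = ADVISORIES[name]
            if parse_version(version) < fixed:
                findings.append(
                    Finding("dependency-scan", f"{name}@{version}: {advisory_id}")
                )
    return findings


def hybrid_scan(
    deps: dict[str, str],
    code: str,
    llm_review: Callable[[str], list[str]],
) -> list[Finding]:
    """Baseline findings first, then whatever the LLM reviewer reports."""
    baseline = dependency_scan(deps)
    reasoning = [Finding("llm-review", note) for note in llm_review(code)]
    return baseline + reasoning
```

For example, `hybrid_scan({"example-lib": "2.3.0"}, source, reviewer)` reports the dependency finding even if `reviewer` returns nothing, so the baseline does not depend on prompt phrasing or model variance.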

If you’re interested in the methodology, experiments, and detailed analysis behind these findings, read the full white paper.