AI agents are here to stay

Large Language Models (LLMs) are everywhere, handling jobs that range from chat to document parsing. Soon after LLMs took the world by storm, developers started building more focused, goal-oriented LLM apps modeled on human reasoning and problem-solving. These apps became known as AI agents.

Here’s how one company, CrewAI, is using LangChain and Replit to solve complex tasks.

AI agents with CrewAI

CrewAI is a library specifically designed to build and orchestrate groups of AI agents. It's made to be straightforward and modular, so integrating it into your projects is a breeze. Think of CrewAI like a set of building blocks - each piece is unique, but they're all designed to fit together smoothly. Built on top of LangChain, it's inherently compatible with many existing tools, including local open-source models through platforms like Ollama.

The AI agents can run natively in the cloud with Replit, making it even easier to get started with CrewAI. Check out the CrewAI templates on Replit to see the agents in action, or use them as a starting point for your own projects so you don't have to start from scratch. Here’s how it works.

How CrewAI works

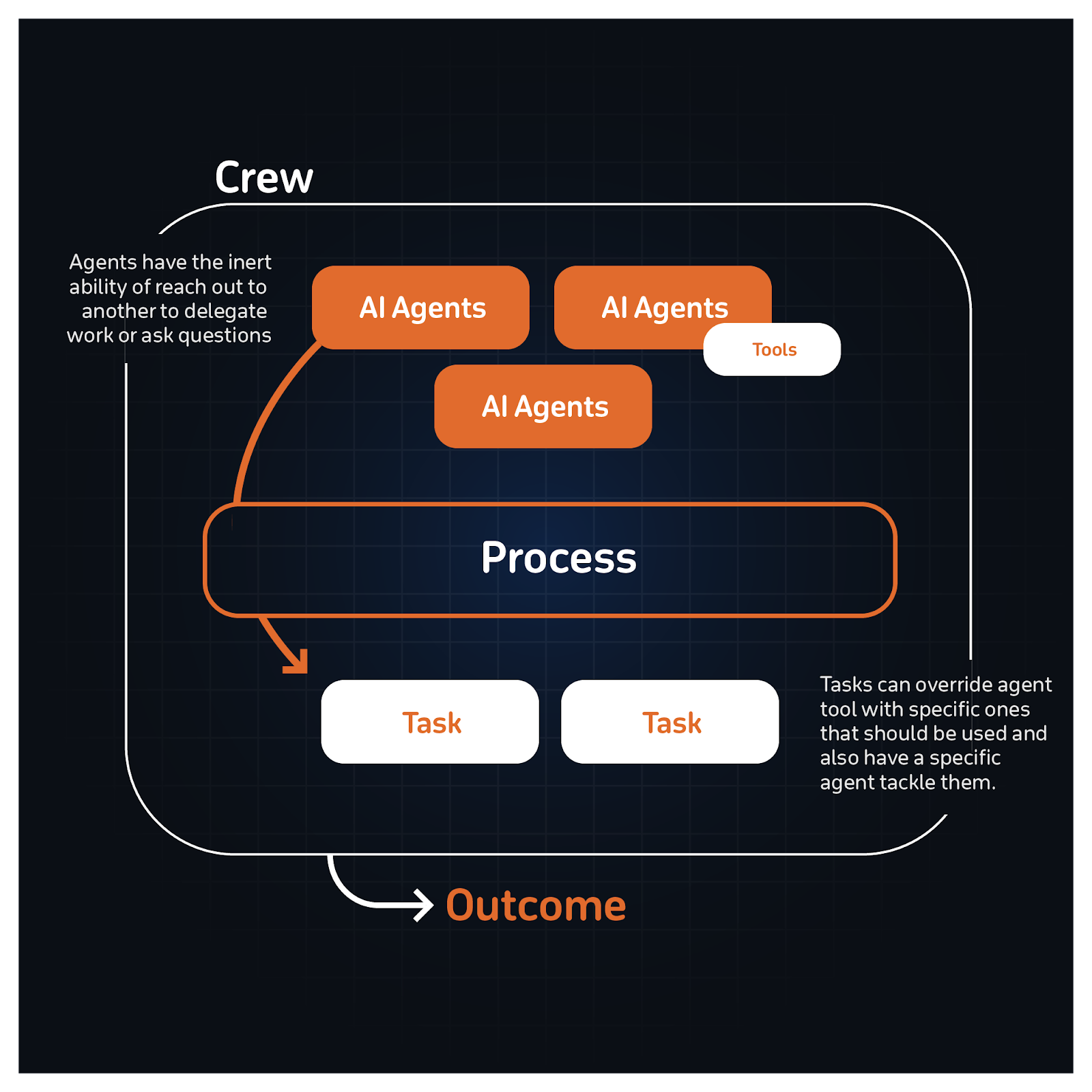

CrewAI has four main building blocks: Agents, Tasks, Tools, and Crews.

- Agents are your dedicated team members, each with its own role, backstory, goal, and memory.

- Tasks are small and focused missions a given Agent should accomplish.

- Tools are the equipment your agents use to perform their tasks efficiently.

- Crews are where Agents, Tasks, and a Process meet. This is the container layer where the work happens.
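To make the relationship between the four building blocks concrete, here is a minimal toy model in plain Python. It is not CrewAI's API: the class names mirror the concepts above, but every field, method, and the `kickoff` helper are illustrative assumptions, and the "agent" simply runs its tools where a real one would call an LLM. The process is assumed to be sequential, with each task's output passed to the next task as context.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Assumption: a tool is modeled as a plain function from text to text.
Tool = Callable[[str], str]


@dataclass
class Agent:
    """A team member with a role, goal, backstory, tools, and memory."""
    role: str
    goal: str
    backstory: str
    tools: Dict[str, Tool] = field(default_factory=dict)
    memory: List[str] = field(default_factory=list)

    def perform(self, description: str, context: str) -> str:
        # Stand-in for an LLM call: run every tool over the context in order.
        result = context
        for tool in self.tools.values():
            result = tool(result)
        output = f"[{self.role}] {description}: {result}"
        self.memory.append(output)  # remember what this agent produced
        return output


@dataclass
class Task:
    """A small, focused mission assigned to one agent."""
    description: str
    agent: Agent


@dataclass
class Crew:
    """Container where agents, tasks, and a (sequential) process meet."""
    agents: List[Agent]
    tasks: List[Task]

    def kickoff(self, initial_input: str = "") -> str:
        # Sequential process: each task's output becomes the next task's context.
        context = initial_input
        for task in self.tasks:
            context = task.agent.perform(task.description, context)
        return context


researcher = Agent(
    role="Researcher",
    goal="Collect facts",
    backstory="A careful analyst",
    tools={"upper": str.upper},  # hypothetical tool: uppercase the text
)
writer = Agent(role="Writer", goal="Draft a summary", backstory="A concise editor")

crew = Crew(
    agents=[researcher, writer],
    tasks=[Task("research", researcher), Task("summarize", writer)],
)
final_output = crew.kickoff("ai agents")
print(final_output)
```

The point of the sketch is the shape of the data: tasks point at agents, agents carry tools and memory, and the crew owns the order in which work happens. CrewAI's own classes add LLM calls and other behavior that this toy model leaves out.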

CrewAI is built on LangChain, enabling developers to equip their agents with any of LangChain's existing tools and toolkits.

For example, you could use LangChain’s Gmail Toolkit to enable a crew of AI agents to sort through your inbox and help you write an email after they've done some research. AI agents built with CrewAI support a wide range of use cases, and the vision is to keep expanding on top of LangChain, allowing you to plug in other components.

Because CrewAI is built on top of LangChain, you can easily debug CrewAI agents with LangSmith. When you turn on LangSmith, it logs all agent runs. This means you can quickly inspect (1) what sequence of calls is being made, (2) what the input to each call is, and (3) what the output of each call is. Many of those calls go to LLMs - which are non-deterministic and can sometimes produce surprising results. Having this level of visibility into your CrewAI agents is helpful when you are trying to make them as performant as possible.
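As a rough sketch of what "turning on LangSmith" can look like, LangChain-based code commonly enables tracing through environment variables set before any agent runs. The helper name `enable_langsmith_tracing`, the project name `crewai-demo`, and the placeholder key below are assumptions made for illustration; the variable names reflect LangSmith's documented settings as I understand them, so check the current LangSmith docs before relying on them.

```python
import os


def enable_langsmith_tracing(api_key: str, project: str = "crewai-demo") -> None:
    """Set the environment variables LangChain reads to send traces to LangSmith.

    Hypothetical helper: call it before creating or running any agents.
    """
    os.environ["LANGCHAIN_TRACING_V2"] = "true"   # switch tracing on
    os.environ["LANGCHAIN_API_KEY"] = api_key     # your LangSmith API key
    os.environ["LANGCHAIN_PROJECT"] = project     # group runs under one project


# Placeholder key for illustration only; load a real key from a secret store.
enable_langsmith_tracing(api_key="YOUR_LANGSMITH_API_KEY")
```

On Replit, the same values would more likely live in the workspace's secrets than in code, so the key never ends up in a forked template.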

Try CrewAI

Try the following templates to build your own Crew and see it in action. Each contains functional AI agents and crews with their own goals, and you can fork, customize, and test them yourself.

- Trip planning assistant

- Building a landing page from a one-line idea

- Individual stock analysis (Disclaimer: this is not investment advice)

Learn about the latest at CrewAI by following their founder, João Moura, on X/Twitter.